DigitalOcean Kubernetes: Infrastructure Best Practices

This series of articles explores the essential factors in taking DigitalOcean Kubernetes (DOKS) to production. Use these articles as a guide to achieving production readiness. We have also embedded a curated list of code snippets, templates, and best-practice guides in the articles.

Planning your Infrastructure

Kubernetes infrastructure planning is crucial because it sets the foundation for a stable and scalable application deployment platform. With proper planning, you can avoid encountering performance issues, security vulnerabilities, and other problems that can impact the availability of your applications.

The Right Region

The region you select can significantly affect the performance and user experience of your applications, and it determines whether you can comply with local regulations. Before deploying your workloads, consider proximity to end users, regulatory requirements, network latency, the availability of multiple data center locations, and redundancy and disaster recovery capabilities.

For example, our newly launched state-of-the-art SYD1 data center offers:

- A private internet edge and backbone network that provides direct access to Asia, North America, and Europe and reduces dependency on the public internet.

- A network capacity of 400 Gbps and connections to California and Singapore over the lowest-latency links available today.

This makes SYD1 an ideal location for network-intensive workloads running on DigitalOcean Kubernetes.

For more details, refer to the guide How to choose a data center location for your business, which can help you decide.
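
As a rough illustration of weighing these factors, the sketch below filters candidate regions by a compliance flag and then picks the one with the lowest measured latency. The `RegionCandidate` type, the `pick_region` helper, and the latency figures are hypothetical placeholders, not DigitalOcean data or an official selection method; substitute your own measurements and the outcome of your own regulatory review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionCandidate:
    slug: str               # region slug, e.g. "syd1"
    latency_ms: float       # median latency measured from your main user base
    meets_compliance: bool  # outcome of your own regulatory review


def pick_region(candidates: list[RegionCandidate]) -> RegionCandidate:
    """Return the compliant candidate with the lowest measured latency."""
    compliant = [c for c in candidates if c.meets_compliance]
    if not compliant:
        raise ValueError("no candidate region satisfies the compliance requirements")
    return min(compliant, key=lambda c: c.latency_ms)


if __name__ == "__main__":
    # Placeholder numbers for illustration only; replace with your own measurements.
    measured = [
        RegionCandidate("syd1", latency_ms=18.0, meets_compliance=True),
        RegionCandidate("sgp1", latency_ms=95.0, meets_compliance=True),
        RegionCandidate("sfo3", latency_ms=150.0, meets_compliance=False),
    ]
    print(pick_region(measured).slug)
```

In practice you would likely weigh more than two criteria (for example, disaster recovery pairing), but the same filter-then-rank shape still applies.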

Networking Strategy

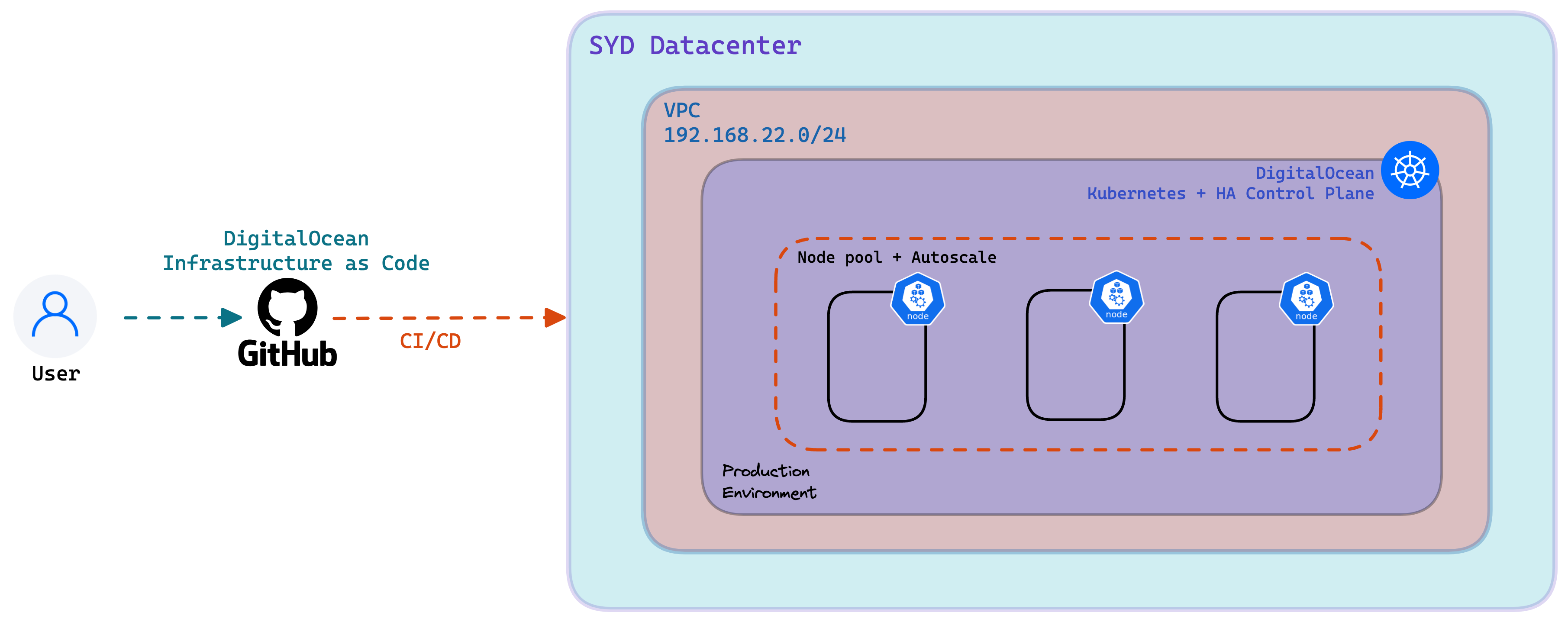

A VPC (Virtual Private Cloud) is a logical isolation of DigitalOcean resources. VPC networks allow you to better secure execution environments, tenants, and applications by isolating resources into networks that the public internet can’t reach.

In DigitalOcean Kubernetes, a VPC creates a private network for your Kubernetes cluster, and you can place other DigitalOcean resources, such as Managed Database clusters, in the same VPC. This helps to protect your Kubernetes workloads from unauthorized access. For example, you can create one or more DOKS clusters within the same VPC to establish secure inter-cluster communications.

Plan your VPC architecture carefully, taking into account the security and connectivity needs of your specific use case. You’ll also want to set up network isolation by creating tenants within the VPC network.
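
One part of VPC planning that is easy to check mechanically is whether the address ranges you intend to use collide. The following is a minimal sketch, assuming you want every planned range (VPCs, office networks, VPNs) to be non-overlapping so they can be connected later. The `find_overlaps` helper and the example names and ranges are hypothetical; only Python's standard `ipaddress` module is used.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of names whose CIDR ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    # Hypothetical plan: the office VPN range sits inside the production VPC range.
    plan = {
        "prod-vpc": "10.10.0.0/20",
        "staging-vpc": "10.10.16.0/20",
        "office-vpn": "10.10.8.0/24",
    }
    for a, b in find_overlaps(plan):
        print(f"overlap: {a} <-> {b}")
```

Running a check like this before creating any resources is cheaper than renumbering a network after workloads depend on it.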

Kubernetes Infrastructure Design

Cluster Architecture

A Kubernetes cluster commonly needs both to scale to accommodate increasing workloads and to be fault-tolerant, remaining available despite failures such as data center outages, machine failures, and network partitions. Consider these factors before designing the cluster architecture (control plane and node plane). As a managed Kubernetes service provider, we take on the burden of setting up a resilient, highly available control plane, so you can focus on designing the node plane.

DigitalOcean HA Control Plane Architecture

Clusters are fully functional with a single control plane node. However, the Kubernetes API may be unavailable during a cluster upgrade or an outage of the underlying infrastructure, resulting in service downtime.

We strongly recommend enabling DigitalOcean HA Control Plane for production workloads.

For further reading, check out How a Kubernetes high availability control plane maximizes uptime and provides reliability, which discusses the importance of an HA control plane for your production workloads.

Node Plane Architecture

Determine the specifications for the nodes in your cluster, such as CPU, memory, and storage resources. Then, consider the expected workload, scalability requirements, and specific hardware or software dependencies. You might not get it right the first time; it may take several iterations to reach the desired state. DigitalOcean Kubernetes also provides a Cluster Autoscaler (CA) that automatically adjusts the size of a Kubernetes cluster by adding or removing nodes based on the cluster’s capacity to schedule pods.
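
For a first sizing estimate, a back-of-the-envelope calculation is often enough. The sketch below is a hypothetical helper, not a DOKS feature or the Cluster Autoscaler's algorithm: it estimates how many nodes of a given size are needed to fit the summed pod resource requests while keeping some headroom. It treats node capacity as fully allocatable and ignores per-pod bin-packing, so read the result as a lower bound for your first iteration.

```python
import math


def nodes_needed(total_cpu_m: int, total_mem_mib: int,
                 node_cpu_m: int, node_mem_mib: int,
                 headroom: float = 0.2) -> int:
    """Estimate node count so that summed requests fit with spare capacity.

    CPU is in millicores and memory in MiB. `headroom` is the fraction of
    each node kept free; it is an assumption to tune, not a DOKS setting.
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_cpu = node_cpu_m * (1 - headroom)
    usable_mem = node_mem_mib * (1 - headroom)
    by_cpu = math.ceil(total_cpu_m / usable_cpu)
    by_mem = math.ceil(total_mem_mib / usable_mem)
    # The scarcer resource decides; always keep at least one node.
    return max(by_cpu, by_mem, 1)


if __name__ == "__main__":
    # Illustrative workload: 6 vCPU and 20000 MiB requested in total,
    # on nodes with 4 vCPU and 8192 MiB each.
    print(nodes_needed(6000, 20000, node_cpu_m=4000, node_mem_mib=8192))
```

In this example memory, not CPU, determines the node count, which is a hint that a memory-heavier node size may fit the workload better.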

When you need to write and access persistent data in a Kubernetes cluster, you can create and access DigitalOcean Volumes Block Storage by creating a PersistentVolumeClaim (PVC) as part of your deployment. DigitalOcean Volumes makes RWO (ReadWriteOnce) block storage available to pods. For RWX (ReadWriteMany) block or file-based storage, consider OpenEBS NFS Provisioner, a 1-click app in our Kubernetes Marketplace.
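
To make the PVC step concrete, here is a minimal sketch that builds a PersistentVolumeClaim manifest as a Python dictionary and prints it as JSON. It assumes the cluster's block storage class is named `do-block-storage`; confirm this with `kubectl get storageclass` before relying on it. The `build_pvc` helper and the claim name `app-data` are hypothetical.

```python
import json


def build_pvc(name: str, size_gib: int, namespace: str = "default") -> dict:
    """Build a ReadWriteOnce PersistentVolumeClaim manifest."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Block storage volumes attach to one node at a time.
            "accessModes": ["ReadWriteOnce"],
            # Assumed storage class name; verify it in your cluster.
            "storageClassName": "do-block-storage",
            "resources": {"requests": {"storage": f"{size_gib}Gi"}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pvc("app-data", 10), indent=2))
```

Because `kubectl` accepts JSON manifests, you can save the output to a file such as `pvc.json` and apply it with `kubectl apply -f pvc.json`, then reference the claim by name from your pod spec.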

Platform Automation using GitOps

There are several ways to approach infrastructure automation, but modern best practices revolve around GitOps. GitOps uses a Git repository as the single source of truth. Just as development teams manage application source code, operations teams that adopt GitOps manage configuration files stored as code (infrastructure as code) in a Git repository.

Infrastructure as Code

Infrastructure as Code (IaC) should be your strategy for managing cloud infrastructure, because it is what enables automation with GitOps. First, define the desired state of your cloud infrastructure in code. This code describes the infrastructure components and their configuration, such as Kubernetes node groups, storage accounts, and networking.

We will not dive deep into the importance of IaC here, but check out Infrastructure as Code Explained if you are interested.

Infrastructure as Code Tool

Infrastructure as Code (IaC) tools like Terraform, Pulumi, and Crossplane enable organizations to manage and provision their infrastructure using code. These tools automate the deployment and management of resources, reducing manual effort and ensuring consistency across environments.

The following official DigitalOcean IaC packages are available for the tools mentioned above:

If you are a beginner and need to learn more about IaC tools like Terraform, we recommend following this tutorial series.

Merge Requests (MRs)

GitOps uses merge requests (MRs) or pull requests (PRs) as the change mechanism for all infrastructure updates. The MR or PR is where teams collaborate through reviews and comments and where formal approvals occur. A merge commits the change to your main (or trunk) branch and serves as an audit trail.

CI/CD

GitOps automates infrastructure updates using a Git workflow with continuous integration and continuous delivery (CI/CD). When new code is merged, the CI/CD pipeline enacts the change in the environment. Any configuration drift, such as manual changes or errors, is overwritten by GitOps automation so the environment converges on the desired state defined in Git.
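
The convergence behavior described above can be sketched as a comparison between the desired state read from Git and the actual state observed in the environment. The code below is a simplified illustration, not how any particular GitOps tool is implemented. The `plan_changes` and `apply_plan` helpers and the resource names are hypothetical, and a real pipeline would call an IaC tool instead of editing a dictionary.

```python
import copy


def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and actual state and list what must change."""
    return {
        "create": sorted(k for k in desired if k not in actual),
        "update": sorted(k for k in desired if k in actual and desired[k] != actual[k]),
        "delete": sorted(k for k in actual if k not in desired),
    }


def apply_plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, dict]:
    """Return the state after reconciliation; drift is overwritten."""
    plan = plan_changes(desired, actual)
    result = copy.deepcopy(actual)
    for name in plan["delete"]:
        del result[name]
    for name in plan["create"] + plan["update"]:
        result[name] = copy.deepcopy(desired[name])
    return result


if __name__ == "__main__":
    # Illustrative state: someone scaled the pool by hand and left a debug firewall.
    desired = {
        "node-pool/web": {"size": "s-4vcpu-8gb", "count": 3},
        "vpc/prod": {"ip_range": "10.10.0.0/20"},
    }
    actual = {
        "node-pool/web": {"size": "s-4vcpu-8gb", "count": 5},
        "firewall/debug": {"inbound": "0.0.0.0/0"},
    }
    print(plan_changes(desired, actual))
    assert apply_plan(desired, actual) == desired
```

The key property is that the result depends only on the desired state: whatever manual changes were made, the environment ends up matching what is in Git.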

Here are some examples of GitOps best-practice guides:

Security and Testing

Expect an upcoming article dedicated to Kubernetes security best practices in the coming weeks.

- We recommend using aquasecurity/kube-bench to check whether Kubernetes is deployed securely by running the checks documented in the CIS Kubernetes Benchmark. You can incorporate this tool into your CI workflow or run it on its own (not recommended).

- Additionally, you can use testing frameworks such as kitchen-terraform and Terratest to verify the infrastructure provisioned using Terraform.

DOKS IaC Examples

We have put together examples that you can test and take inspiration from. Please feel free to raise GitHub issues if you find a bug or would like to improve the existing examples. We are always looking to improve our content.

- K8s-bootstrapper is an extensible framework for setting up a production-ready DigitalOcean Kubernetes cluster, powered by Terraform and ArgoCD. We will cover the bootstrapper in detail in later posts in this series.

- Real-World Example of Production Workloads Running on DOKS: In this project, we walk you through installing Mastodon on DigitalOcean Kubernetes. You’ll get hands-on with modern best practices around GitOps and production readiness, and integrate seamlessly with our Managed DBaaS and Spaces (S3-compatible cloud object storage).

Final Thoughts

- It takes thoughtful planning and design to set up a production-grade cloud infrastructure for Kubernetes.

- Planning your infrastructure is as important as building it. You may get it wrong the first time, but you can improve it and keep evolving it as your needs grow.

- Spend time discussing the networking infrastructure with your engineers and architects. Don’t reinvent the wheel; leave the heavy lifting to managed Kubernetes services like DigitalOcean Kubernetes, which have built-in resiliency and high availability.

- IaC should be your only strategy for managing cloud infrastructure.

- Adopt modern best practices such as GitOps for platform automation, as described in this guide.

- Take inspiration from the real-life examples we have put together for you.

- Emphasize security (more on this in the next post).