How to Monitor PostgreSQL Database Performance

PostgreSQL is an open source, object-relational database built for extensibility, data integrity, and speed. It offers strong concurrency support and is fully ACID-compliant, and it supports dynamic loading and catalog-driven operations that let users customize its data types, functions, and more.

DigitalOcean Managed Databases include metrics visualizations so you can monitor performance and health of your database cluster.

Cluster metrics monitor the performance of the nodes in a database cluster. Cluster metrics cover primary and standby nodes; metrics for each read-only node are displayed independently. This data can help guide capacity planning and optimization. You can also set up alerting on cluster metrics.

Database metrics monitor the performance of the database itself. This data can help assess the health of the database, pinpoint performance bottlenecks, and identify unusual use patterns that may indicate an application bug or security breach.

View PostgreSQL Metrics

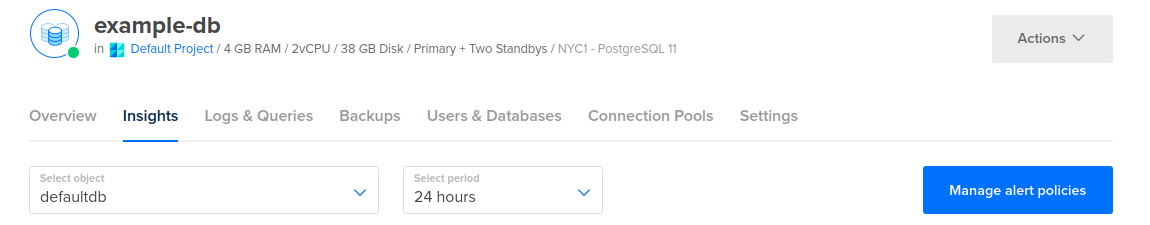

To view performance metrics for a PostgreSQL database cluster, click the name of the database to go to its Overview page, then click the Insights tab.

The Select object drop-down menu lists the cluster itself and all of the databases in the cluster. Choose the cluster or a database to view its metrics.

In the Select Period drop-down menu, you can choose a time frame for the x-axis of the graphs, ranging from 1 hour to 30 days. Each line in the graphs displays about 300 data points.
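As a rough guide, assuming the roughly 300 points are spaced evenly across the selected period $T$, each point covers an interval of about $T/300$. At the two ends of the drop-down range:

$$
\Delta t \approx \frac{T}{300}, \qquad
\Delta t_{1\,\mathrm{h}} \approx \frac{3600\ \mathrm{s}}{300} = 12\ \mathrm{s}, \qquad
\Delta t_{30\,\mathrm{d}} \approx \frac{2\,592\,000\ \mathrm{s}}{300} = 8640\ \mathrm{s} = 2.4\ \mathrm{h}
$$

At the 30-day setting, a spike that lasts only a few minutes may therefore be smoothed out or hidden, so a shorter period is more useful when investigating brief events.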

By default, the summary to the right shows the most recent metrics values. When you hover over a different time in a graph, the summary displays the values from that time instead.

If you recently provisioned the cluster or changed its configuration, it may take a few minutes for the metrics data to finish processing before you see it on the Insights page.

PostgreSQL Metrics Details

PostgreSQL databases have the following metrics:

- Number of database connections

- Cache hit ratio

- Proportion of index scans over total scans

- Fetch, insert, update and delete throughput

- Rate of deadlock creation

- Replication delay in bytes (if replication is enabled)

If you have 200 or more databases on a single cluster, you may be unable to retrieve their metrics. If you reach this limit, create any additional databases in a new cluster.

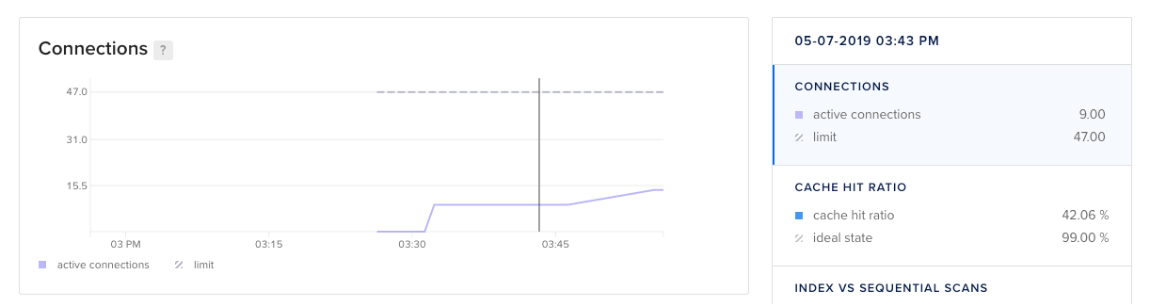

Connections

The connections plot displays the number of client connections to the database and the connection limit, which is determined by the amount of memory on your primary node. Once the limit is reached, new client connections are refused until existing ones are closed.

You can use a connection pooling utility to avoid connection contention. Learn more about managing connection pools.
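To check connection headroom outside the control panel, you can compute how much of the limit is in use. The sketch below is illustrative only: connection_usage_pct is a hypothetical helper (not part of any DigitalOcean tooling), and the commented psql commands assume a connection string in a hypothetical DATABASE_URL variable. The max_connections setting may differ slightly from the limit the plot shows, because some connection slots can be reserved for administrative use.

```shell
# Hypothetical helper: percentage of the connection limit currently in use.
# Arguments: current connection count, connection limit (both integers).
connection_usage_pct() {
  local current=$1 limit=$2
  if (( limit <= 0 )); then
    echo "limit must be positive" >&2
    return 1
  fi
  # Integer percentage, rounded down.
  echo $(( current * 100 / limit ))
}

# One way to gather the inputs (assumes psql and DATABASE_URL):
#   psql "$DATABASE_URL" -Atc "SELECT count(*) FROM pg_stat_activity WHERE backend_type = 'client backend';"
#   psql "$DATABASE_URL" -Atc "SHOW max_connections;"
```

For example, connection_usage_pct 45 100 prints 45. If the value stays close to 100, a connection pool is usually a better fix than raising the limit.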

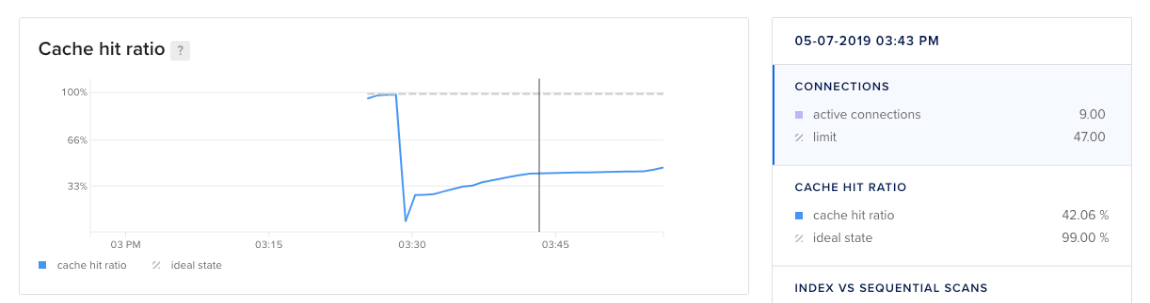

Cache Hit Ratio

The cache hit ratio plot tracks read efficiency as measured by the proportion of reads from cache versus the total reads from both disk and cache. With the exception of data warehouse use cases, an ideal cache hit ratio is 99% or higher, meaning that at least 99% of reads are from cache and no more than 1% are from disk.

If your cache hit ratio is consistently lower than 99%, consider upgrading to a plan with additional memory to increase your cache size.
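You can approximate this ratio yourself from PostgreSQL's cumulative statistics. This sketch assumes the plot is based on the blks_hit and blks_read counters in pg_stat_database, which DigitalOcean does not state. Because those counters accumulate since the last statistics reset, the result is a long-run ratio rather than the value at a particular point on the graph. cache_hit_ratio and DATABASE_URL are hypothetical names.

```shell
# Hypothetical helper: cache hit ratio as a percentage with two decimals,
# computed as blks_hit / (blks_hit + blks_read).
cache_hit_ratio() {
  local hit=$1 disk=$2
  awk -v h="$hit" -v d="$disk" 'BEGIN {
    if (h + d == 0) { print "n/a"; exit }   # no reads recorded yet
    printf "%.2f\n", 100 * h / (h + d)
  }'
}

# Fetch the counters for the current database (assumes psql and DATABASE_URL):
#   IFS='|' read -r hit disk < <(psql "$DATABASE_URL" -Atc \
#     "SELECT blks_hit, blks_read FROM pg_stat_database WHERE datname = current_database();")
#   cache_hit_ratio "$hit" "$disk"
```

For example, cache_hit_ratio 990 10 prints 99.00: 990 of 1,000 block reads came from cache, right at the 99% threshold.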

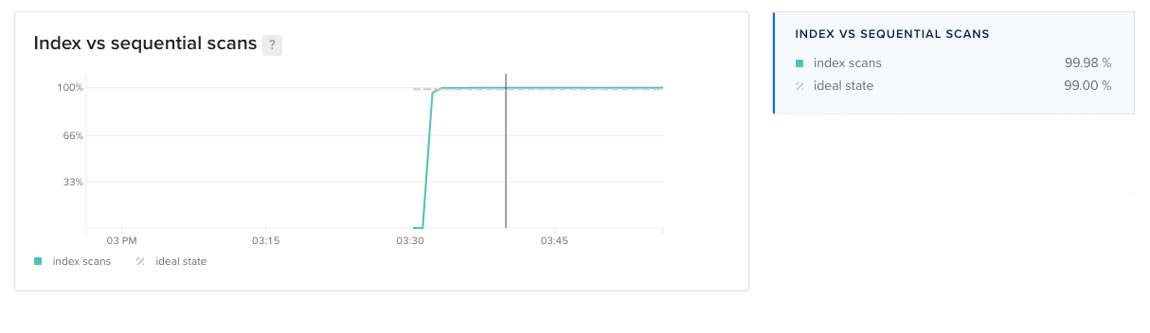

Index vs Sequential Scans

The index vs sequential scans plot displays index scans as a percentage of all scans (index and sequential) across all user tables in the database. Indexes point to table data and make data retrieval more efficient than row-by-row sequential scans. For read-heavy use cases, the proportion of index scans on larger tables should ideally be 99% or higher.

If you have large tables and the proportion of index scans is consistently well below 99%, make sure those tables are indexed on the columns your queries filter by.
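To find out which tables are responsible, you can compare the idx_scan and seq_scan counters that PostgreSQL keeps per table in pg_stat_user_tables. This is a sketch under assumptions: index_scan_pct is a hypothetical helper, DATABASE_URL is a hypothetical variable, and whether the plot uses exactly these counters is not stated by DigitalOcean.

```shell
# Hypothetical helper: index scans as a percentage of all scans.
index_scan_pct() {
  local idx=$1 seq=$2
  awk -v i="$idx" -v s="$seq" 'BEGIN {
    if (i + s == 0) { print "n/a"; exit }   # table never scanned
    printf "%.1f\n", 100 * i / (i + s)
  }'
}

# List the largest tables where sequential scans outnumber index scans
# (assumes psql and DATABASE_URL; idx_scan is NULL for tables with no indexes):
#   psql "$DATABASE_URL" -c "
#     SELECT relname, seq_scan, idx_scan,
#            pg_size_pretty(pg_relation_size(relid)) AS size
#     FROM pg_stat_user_tables
#     WHERE seq_scan > coalesce(idx_scan, 0)
#     ORDER BY pg_relation_size(relid) DESC
#     LIMIT 10;"
```

Sorting by size keeps the focus on large tables, since sequential scans on small tables are often cheaper than index lookups.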

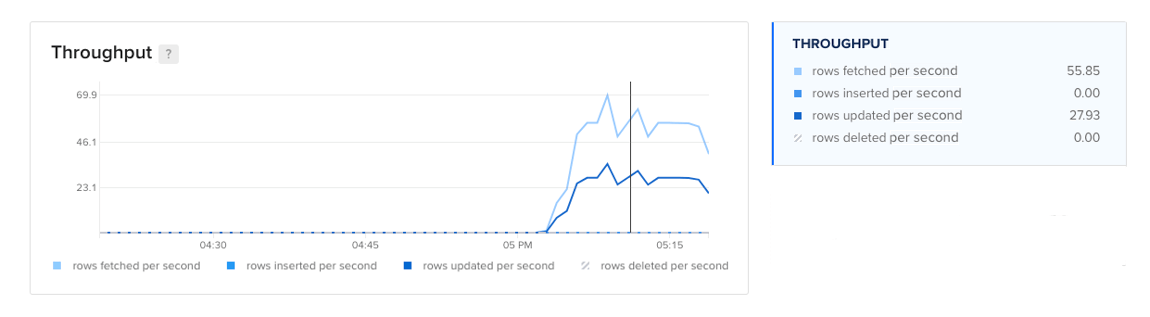

Throughput

The throughput plot records the rate of row fetches, row inserts, row updates, and row deletes across all user tables in the database. Monitoring the overall usage pattern is useful for making tuning decisions and identifying potential problems. For example, unexpected changes in usage patterns could indicate a newly introduced bug or security breach.

This data is also useful for understanding how efficiently your database handles each type of operation and identifying opportunities to improve performance through tuning, design modifications, and scaling. For example, while indexes are helpful for improving performance in read-heavy use cases, they can slow down insert, update and delete (DML) operations.
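Throughput is a rate, so if you collect the underlying counters yourself you need two samples and the time between them. This sketch assumes the plotted rates correspond to the cumulative tup_fetched, tup_inserted, tup_updated, and tup_deleted counters in pg_stat_database; counter_rate and DATABASE_URL are hypothetical names.

```shell
# Hypothetical helper: per-second rate from two samples of a cumulative counter.
# Arguments: previous value, current value, seconds between the samples.
counter_rate() {
  local prev=$1 curr=$2 secs=$3
  awk -v p="$prev" -v c="$curr" -v t="$secs" 'BEGIN {
    if (t <= 0) { print "interval must be positive" > "/dev/stderr"; exit 1 }
    if (c < p) { print "counter reset"; exit }   # stats were reset between samples
    printf "%.1f\n", (c - p) / t
  }'
}

# Take two samples some seconds apart (assumes psql and DATABASE_URL):
#   psql "$DATABASE_URL" -Atc "SELECT tup_fetched, tup_inserted, tup_updated, tup_deleted
#     FROM pg_stat_database WHERE datname = current_database();"
```

For example, counter_rate 1000 4000 60 prints 50.0: 3,000 rows over 60 seconds.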

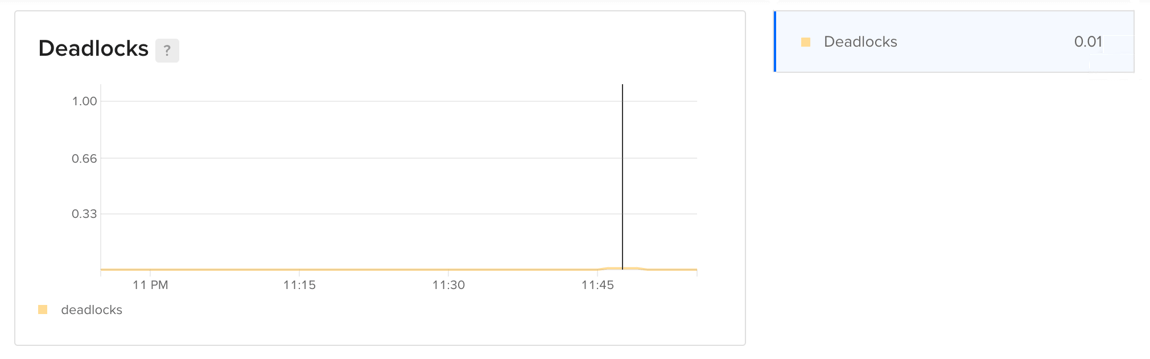

Deadlocks

The deadlocks plot shows the rate of deadlock creation in the database. A deadlock occurs when two or more transactions each hold a lock that another one is waiting for, so none of them can proceed. PostgreSQL detects the deadlock and aborts one of the transactions involved so that the others can continue.

To identify the transactions involved in a deadlock, check the deadlock error details in your PostgreSQL logs. Look for entries containing "deadlock detected" or messages such as "process 12345 detected deadlock", where 12345 is the ID of the server process. It can also help to correlate the timestamp of the PostgreSQL error with the same point in your application logs to understand the conditions that triggered the deadlock.
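If you have a copy of your PostgreSQL logs as a local file, a simple grep pulls out the relevant entries with their line numbers, which makes it easier to line up timestamps with your application logs. find_deadlocks is a hypothetical helper, and how you obtain the log file is outside the scope of this sketch.

```shell
# Hypothetical helper: print deadlock-related lines, with line numbers,
# from a PostgreSQL log file.
find_deadlocks() {
  local logfile=$1
  # "deadlock detected" matches the ERROR message; "detected deadlock"
  # matches lines such as "process 12345 detected deadlock ...".
  grep -n -E 'deadlock detected|detected deadlock' "$logfile"
}
```

Adding grep's -A option (for example, -A 3) also prints the lines that follow each match, such as the DETAIL lines that name the processes involved.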

You can prevent deadlocks by ensuring that all applications that use the database acquire locks on multiple objects in a consistent order.
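One common way to apply a consistent order is to lock every row a transaction needs up front, sorted by primary key, using SELECT ... ORDER BY ... FOR UPDATE. The sketch below assumes a table whose integer primary key column is named id. lock_in_order_sql is a hypothetical helper that only prints the opening statements; the caller would append its own UPDATE statements and COMMIT. Because it interpolates the table name directly, use it only with trusted input.

```shell
# Hypothetical helper: emit the start of a transaction that locks the given
# rows in ascending id order. If every code path locks rows this way, two
# transactions cannot each hold a row the other is waiting for.
lock_in_order_sql() {
  local table=$1; shift
  if [ "$#" -eq 0 ]; then
    echo "no ids given" >&2
    return 1
  fi
  local ids
  # Sort numerically, de-duplicate, and join with commas.
  ids=$(printf '%s\n' "$@" | sort -n -u | paste -sd, -)
  printf 'BEGIN;\nSELECT id FROM %s WHERE id IN (%s) ORDER BY id FOR UPDATE;\n' "$table" "$ids"
}
```

For example, lock_in_order_sql accounts 42 7 19 produces a SELECT that locks ids 7, 19, and 42 in that order, regardless of the order the application supplied them in.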

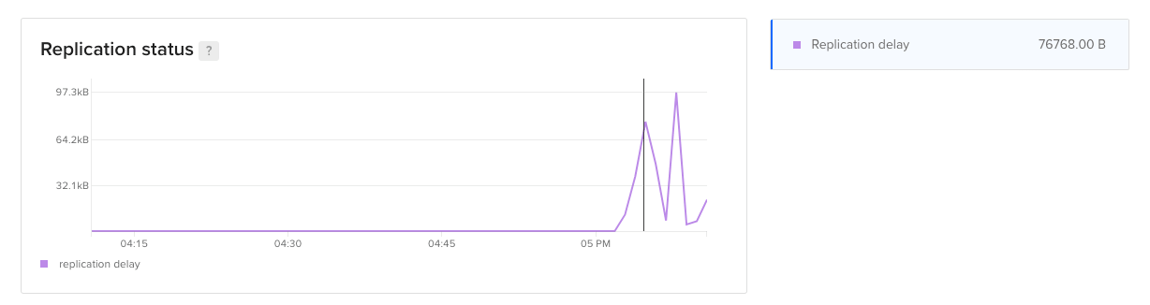

Replication status

If you have a standby node configured, the replication status plot records the lag, in bytes, in replicating data from the primary node to the standby node(s).

Significant replication lags could indicate a network connectivity problem or insufficient CPU resources.
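Replication lag in bytes is the distance between two write-ahead log (WAL) positions, or LSNs. An LSN such as 16/B374D848 is written as the high and low 32-bit halves of a 64-bit byte position in hexadecimal, so the difference is plain integer arithmetic; this is what PostgreSQL's pg_wal_lsn_diff function computes. lsn_to_bytes and lsn_lag_bytes are hypothetical helpers, and the commented query assumes your database user is allowed to read pg_stat_replication, which may be restricted on a managed cluster.

```shell
# Hypothetical helper: convert an LSN such as "16/B374D848" to an absolute byte position.
lsn_to_bytes() {
  local hi=${1%%/*} lo=${1##*/}
  echo $(( (16#$hi << 32) + 16#$lo ))
}

# Hypothetical helper: bytes by which the standby LSN trails the primary LSN.
lsn_lag_bytes() {
  local primary=$1 standby=$2
  echo $(( $(lsn_to_bytes "$primary") - $(lsn_to_bytes "$standby") ))
}

# On the primary, PostgreSQL can compute the lag directly (assumes psql and DATABASE_URL):
#   psql "$DATABASE_URL" -Atc "SELECT application_name,
#       pg_wal_lsn_diff(pg_current_wal_lsn(), replay_lsn) AS lag_bytes
#     FROM pg_stat_replication;"
```

For example, lsn_lag_bytes 1/0 0/FFFFFFFF prints 1, because the two positions are adjacent across the 32-bit boundary.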

Access the Metrics Endpoint

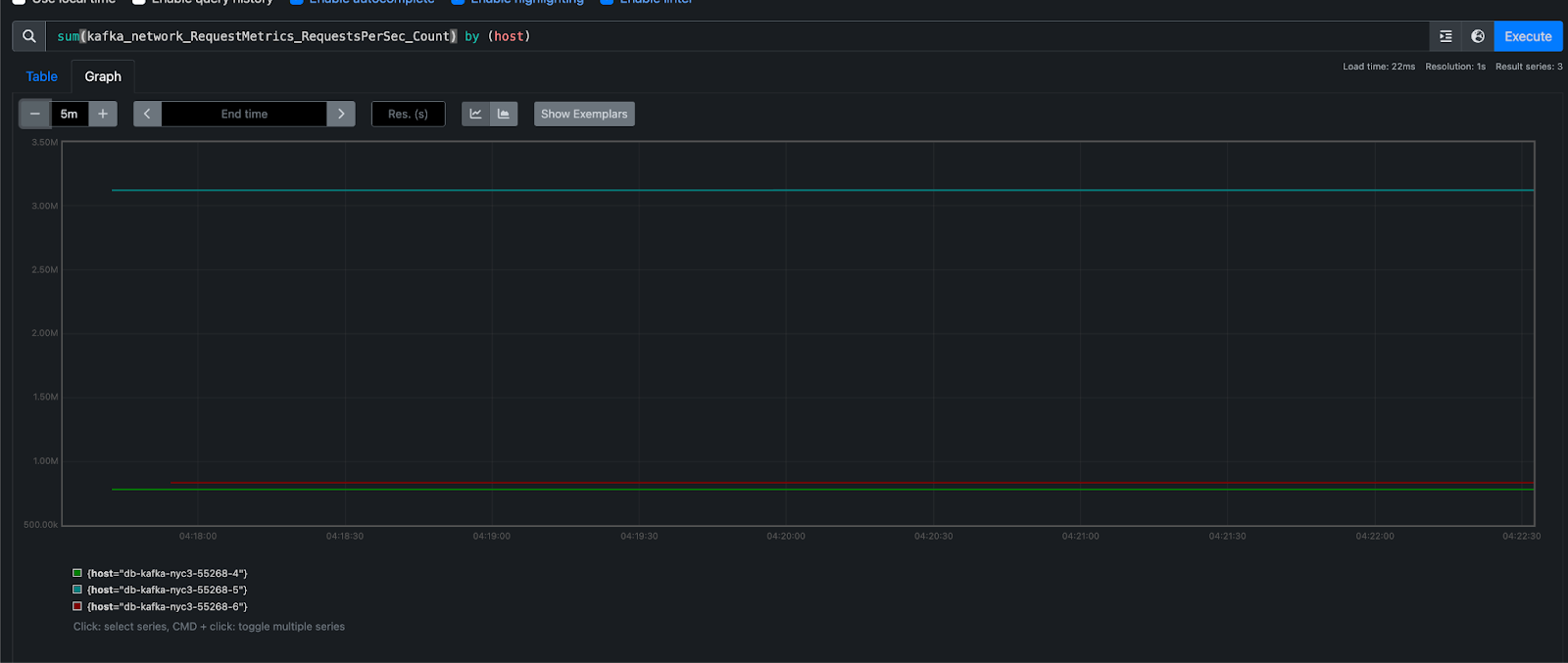

You can also view your database cluster's metrics programmatically through the metrics endpoint, which exposes over twenty times as many metrics as the Insights tab in the control panel.

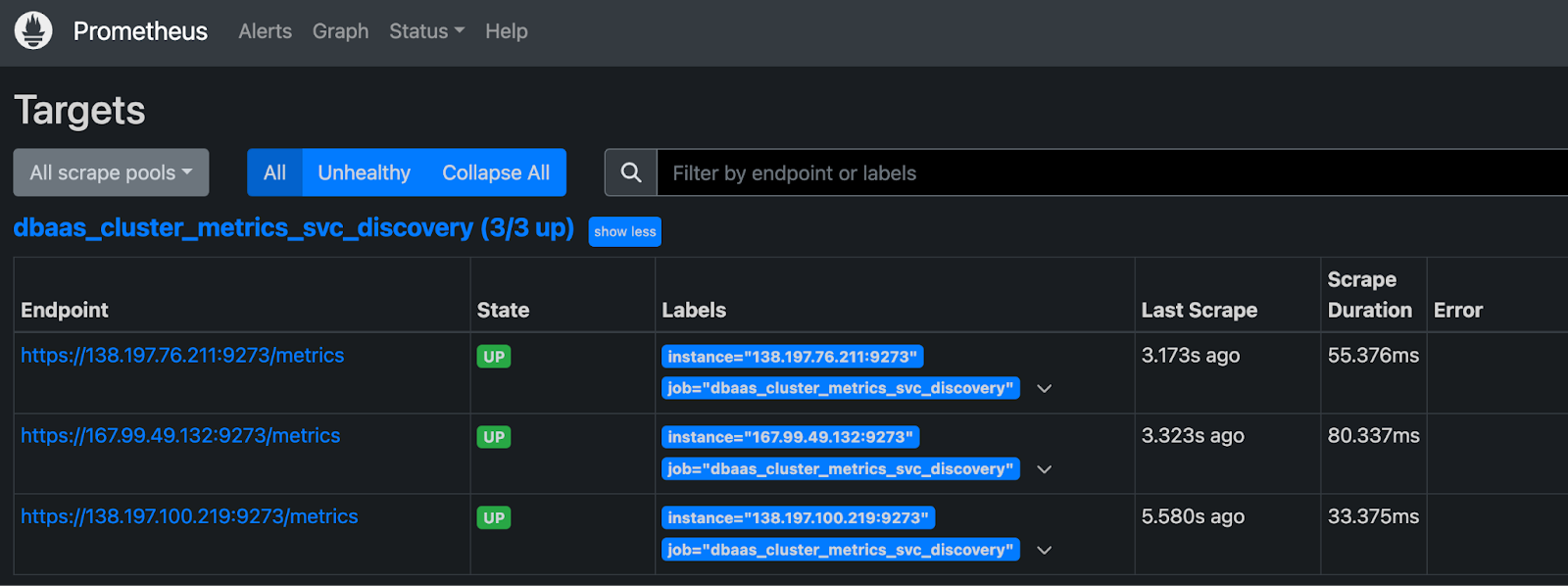

You can access the metrics endpoint with a cURL command or a monitoring system like Prometheus.

Get Hostname and Credentials

First, retrieve your cluster's metrics hostname by sending a GET request to https://api.digitalocean.com/v2/databases/${UUID}, where ${UUID} is your cluster's ID. In the following example, the target database cluster has a standby node, so the response includes a second host/port pair:

```shell
# Double quotes around the URL so the shell expands ${UUID}.
curl --silent -XGET --location "https://api.digitalocean.com/v2/databases/${UUID}" \
  --header 'Content-Type: application/json' \
  --header "Authorization: Bearer $RO_DIGITALOCEAN_TOKEN" | jq '.database.metrics_endpoints'
```

The response contains one host/port pair per node:

```json
[
  {
    "host": "db-test-for-metrics.c.db.ondigitalocean.com",
    "port": 9273
  },
  {
    "host": "replica-db-test-for-metrics.c.db.ondigitalocean.com",
    "port": 9273
  }
]
```
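To turn this response into a list you can loop over, for example to scrape each node with cURL, a small jq filter is enough. metrics_targets is a hypothetical helper; like the commands above, it requires jq.

```shell
# Hypothetical helper: read the API response on stdin and print one
# host:port pair per node.
metrics_targets() {
  jq -r '.database.metrics_endpoints[] | "\(.host):\(.port)"'
}

# Usage (assumes UUID, RO_DIGITALOCEAN_TOKEN, USERNAME, and PASSWORD are set):
#   for target in $(curl --silent "https://api.digitalocean.com/v2/databases/${UUID}" \
#       --header "Authorization: Bearer $RO_DIGITALOCEAN_TOKEN" | metrics_targets); do
#     curl --silent -u "$USERNAME:$PASSWORD" "https://$target/metrics"
#   done
```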

Next, you need your cluster’s metrics credentials. You can retrieve these by making a GET request to https://api.digitalocean.com/v2/databases/metrics/credentials with an admin or write token:

```shell
curl --silent -XGET --location 'https://api.digitalocean.com/v2/databases/metrics/credentials' \
  --header 'Content-Type: application/json' \
  --header "Authorization: Bearer $RW_DIGITALOCEAN_TOKEN" | jq '.'
```

The response contains the credentials:

```json
{
  "credentials": {
    "basic_auth_username": "...",
    "basic_auth_password": "..."
  }
}
```

Access with cURL

To access the endpoint using cURL, make a GET request to https://$HOST:9273/metrics, replacing the hostname, username, and password variables with the credentials you found in the previous steps:

```shell
curl -XGET --silent -u "$USERNAME:$PASSWORD" "https://$HOST:9273/metrics"
```
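The endpoint returns many metrics in the Prometheus text format, so it is often convenient to filter the output. filter_metrics is a hypothetical helper, and the postgresql_ prefix in the usage line is only an example: the actual metric names are not listed here, so inspect the "# TYPE" lines in the output to find them.

```shell
# Hypothetical helper: read Prometheus text-format output on stdin and print
# only samples whose metric name starts with the given prefix, skipping the
# "# HELP" and "# TYPE" comment lines.
filter_metrics() {
  local prefix=$1
  awk -v p="$prefix" '!/^#/ && index($0, p) == 1'
}

# Usage (example prefix only):
#   curl --silent -u "$USERNAME:$PASSWORD" "https://$HOST:9273/metrics" | filter_metrics postgresql_
```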

Access with Prometheus

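To access the endpoint using Prometheus, first copy the following configuration into a file named prometheus.yml, and replace /path/to/ca.crt with the path to your cluster's CA certificate. The $TARGET_ADDRESS (your metrics hostname), $BASIC_AUTH_USERNAME, and $BASIC_AUTH_PASSWORD placeholders are filled in from environment variables when you run up.sh. This configuration gives Prometheus the credentials it needs to access the endpoint: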

```yaml
# prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  - job_name: 'dbaas_cluster_metrics_svc_discovery'
    scheme: https
    tls_config:
      ca_file: /path/to/ca.crt
    dns_sd_configs:
      - names:
          - $TARGET_ADDRESS
        type: 'A'
        port: 9273
        refresh_interval: 15s
    metrics_path: '/metrics'
    basic_auth:
      username: $BASIC_AUTH_USERNAME
      password: $BASIC_AUTH_PASSWORD
```

Then, copy the following script into a file named up.sh. The script uses envsubst to fill in the placeholders from your environment and starts a Prometheus container with the resulting config:

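```shell
#!/bin/bash
set -euo pipefail

# envsubst fills in $TARGET_ADDRESS, $BASIC_AUTH_USERNAME, and
# $BASIC_AUTH_PASSWORD from exported environment variables.
envsubst < prometheus.yml > /tmp/dbaas-prometheus.yml

# Mount the rendered config, plus the CA certificate at the same path that
# ca_file points to, so Prometheus can read it inside the container.
docker run -p 9090:9090 \
  -v /tmp/dbaas-prometheus.yml:/etc/prometheus/prometheus.yml \
  -v /path/to/ca.crt:/path/to/ca.crt:ro \
  prom/prometheus
```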
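envsubst replaces any variable that is not set in its environment with an empty string instead of failing, so a missing export can silently produce a config with an empty hostname or password. A small pre-check, sketched below as the hypothetical require_env helper, catches this before Prometheus starts. It uses printenv so that only exported variables count, since those are the only ones envsubst can see.

```shell
# Hypothetical helper: fail if any named variable is not exported or is empty.
require_env() {
  local name missing=0
  for name in "$@"; do
    # printenv only sees exported variables, matching what envsubst sees.
    if [ -z "$(printenv "$name")" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, before the envsubst line in up.sh:
#   require_env TARGET_ADDRESS BASIC_AUTH_USERNAME BASIC_AUTH_PASSWORD || exit 1
```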

Run the script with bash up.sh, then go to http://localhost:9090/targets in a browser and confirm that each of your cluster's hosts is listed with a state of UP.

Then, navigate to http://localhost:9090/graph to query Prometheus for metrics.

For more details, see the Prometheus DNS SD docs and TLS config docs.

Additional Resources

For more details on each available metric, see the PostgreSQL documentation.