Kubernetes Best Practices

DigitalOcean Kubernetes (DOKS) is a managed Kubernetes service. Deploy Kubernetes clusters with a fully managed control plane, high availability, autoscaling, and native integration with DigitalOcean Load Balancers and volumes. DOKS clusters are compatible with standard Kubernetes toolchains and the DigitalOcean API and CLI.

Kubernetes clusters require a balance of resources in both pods and nodes to maintain high availability and scalability. This article outlines some best practices to help you avoid common disruption problems.

Use Replicas Instead of Bare Pods

We recommend deploying all of your applications in a highly available manner. This means running multiple replicas of each application through a controller such as a Deployment, rather than creating bare pods. A bare pod is not recreated if it is deleted or if the node it runs on fails, whereas a Deployment replaces lost pods to keep the specified number of replicas running at any given time.

How do I do this?

Use the replicas field in your application spec to define at least three replicas:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    app: guestbook
    tier: frontend
spec:
  # modify replicas according to your case
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
        - name: php-redis
          image: gcr.io/google_samples/gb-frontend:v3
```

Size Nodes Appropriately

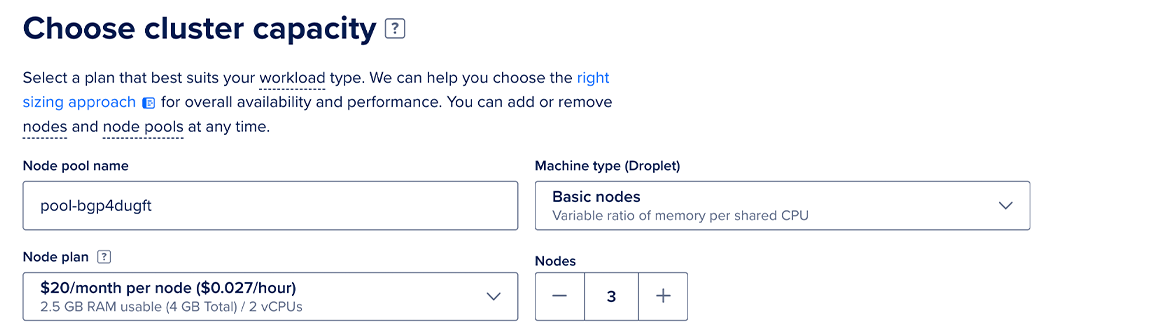

Node size determines the maximum amount of memory you can allocate to pods. Because of this, we recommend using nodes with less than 2 GB of allocatable memory only for development, not for production. For production clusters, we recommend nodes with 2.5 GB or more of allocatable memory, so that the remaining nodes can absorb the workload of a node that goes down.

How do I do this?

During cluster creation, choose a plan from the NODE PLAN drop-down menu that fits your project’s purpose. Plans are divided into two categories: Development plans and Production plans.

Size Node Pools for High Availability

To further ensure high availability, node pools with production workloads should have at least three nodes. This gives the cluster more flexibility to distribute and schedule work on other nodes if a node becomes unavailable.

How do I do this?

You can specify three or more nodes when you create the cluster, or, if you enable autoscaling on the node pool, set its minimum node count to three.

Set Requests and Limits

To keep your cluster running efficiently, we recommend defining resource requests and limits for every container in your deployments.

- requests - Specifies the amount of a resource (such as CPU or memory) reserved for a container when its pod is scheduled. The scheduler only places a pod on a node whose unreserved allocatable capacity covers the pod's total requests; if no node qualifies, the pod is not scheduled. This prevents pods from being placed on nodes that are already fully committed.

- limits - Specifies the maximum amount of a resource a container is allowed to use on a node. A container that exceeds its CPU limit is throttled, and a container that exceeds its memory limit can be terminated. This prevents one pod from slowing down the work of other pods on the same node.

How do I do this?

To set requests and limits, define them under the resources field of each container in your application spec. See the Kubernetes documentation for more resource types.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: app
          image: images.my-company.example/app:v4
          resources:
            requests:
              cpu: 250m
              memory: 64Mi
            limits:
              cpu: 500m
              memory: 128Mi
        - name: log-aggregator
          image: images.my-company.example/log-aggregator:v6
          resources:
            requests:
              cpu: 250m
              memory: 64Mi
            limits:
              cpu: 500m
              memory: 128Mi
```

Set Pod Disruption Budgets

To avoid disruptions to your production workloads, such as during cluster upgrades, you can set up a pod disruption budget (PDB) that limits the number of replicated pods that can be down simultaneously because of voluntary disruptions, such as node drains. For example, with a replica count of 10, setting minAvailable to 7 allows at most three replicas to be down at the same time.

How do I do this?

To set up a pod disruption budget, create a PodDisruptionBudget resource from the policy/v1 API group:

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  minAvailable: 7
  selector:
    matchLabels:
      app: example-app
```

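The minAvailable field sets the minimum number of matching pods that must remain available, and the selector's matchLabels field determines which pods the budget applies to. For the budget to protect an application, these labels must match the labels in the application's pod template.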
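To show how the selector ties a budget to an application, here is a sketch of a Deployment running the 10 replicas from the example above, paired with an equivalent budget written with maxUnavailable instead of minAvailable. Only the app: example-app label comes from the budget above; the Deployment name, container name, image, and the budget name example-app-pdb are hypothetical placeholders.

```yaml
# Hypothetical Deployment: name, container, and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
spec:
  replicas: 10
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        # The budget below selects pods by this label.
        app: example-app
    spec:
      containers:
        - name: example-app
          image: images.my-company.example/example-app:v1
---
# Equivalent budget (hypothetical name): with 10 replicas, allowing at most
# 3 unavailable pods is the same as requiring at least 7 available.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: example-app-pdb
spec:
  maxUnavailable: 3
  selector:
    matchLabels:
      app: example-app
```

A budget sets either minAvailable or maxUnavailable, not both, so use this budget instead of zk-pdb rather than alongside it; the eviction API does not support pods that match more than one budget. The two forms differ when you scale: maxUnavailable: 3 keeps allowing three disruptions at any replica count, while minAvailable: 7 would allow 13 disruptions at 20 replicas.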

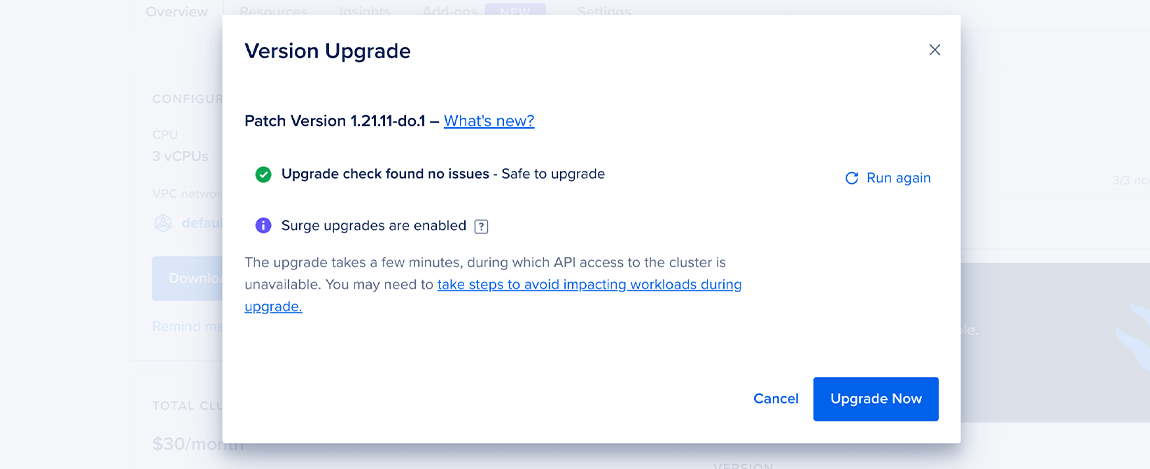

Enable Automatic Upgrades

Enabling automatic upgrades ensures that your cluster is running the latest features, security patches, and stability improvements.

We also recommend enabling surge upgrades when upgrading a cluster.

How do I do this?

To enable automatic upgrades, follow our Kubernetes upgrade guide.

Enable Surge Upgrades

Surge upgrades reduce the overall cluster upgrade time and impact on applications. We recommend enabling surge upgrades when upgrading an existing cluster. Surge upgrades are enabled by default when you create a new cluster.

How do I do this?

To enable surge upgrades, follow our surge upgrade guide.

Check Cluster Linter Messages

Clusterlint is a standalone tool that connects to the cluster’s API server and flags issues with workloads deployed in a cluster. These issues might cause downtime during maintenance or upgrades and could complicate the maintenance or upgrade itself. Using clusterlint regularly can inform you of ongoing issues that otherwise might not be immediately apparent.

How do I do this?

You can access clusterlint using three different methods:

- Control panel - To view clusterlint messages from the control panel, click Kubernetes in the main navigation menu, then select your cluster from the list of clusters. From the cluster’s Overview page, under Operational Readiness Check, click Run Check. After the card updates, it displays any clusterlint results.

- DigitalOcean API call - Send a diagnostic request to the DigitalOcean API and then retrieve the results.

- Command line tool - Install clusterlint from the command line and begin using it to access your clusters.

For a list of common clusterlint errors and their respective fixes, see Clusterlint Error Fixes.