How to Enable Cluster Autoscaler for a DigitalOcean Kubernetes Cluster

DigitalOcean Kubernetes (DOKS) is a managed Kubernetes service. Deploy Kubernetes clusters with a fully managed control plane, high availability, autoscaling, and native integration with DigitalOcean Load Balancers and volumes. DOKS clusters are compatible with standard Kubernetes toolchains and the DigitalOcean API and CLI.

DigitalOcean Kubernetes provides a Cluster Autoscaler (CA) that automatically adjusts the size of a Kubernetes cluster by adding or removing nodes based on the cluster’s capacity to schedule pods.

You can enable autoscaling with minimum and maximum cluster sizes either when you create a cluster or later. You can configure autoscaling with the DigitalOcean Control Panel or with doctl, the DigitalOcean command-line tool.

Enable Autoscaling

Using the DigitalOcean Control Panel

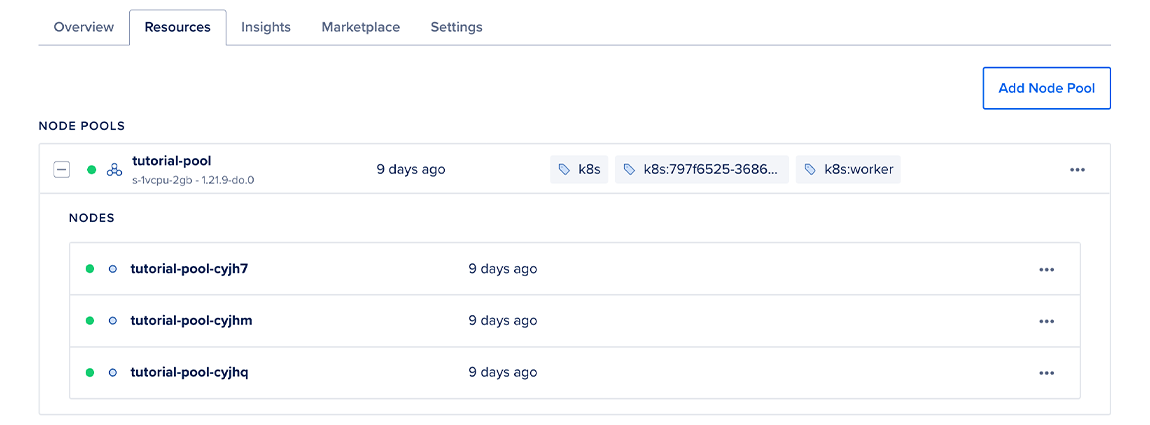

To enable autoscaling on an existing node pool, navigate to your cluster in the Kubernetes section of the control panel, then click on the Resources tab. Click on the three dots to reveal the option to resize the node pool manually or enable autoscaling.

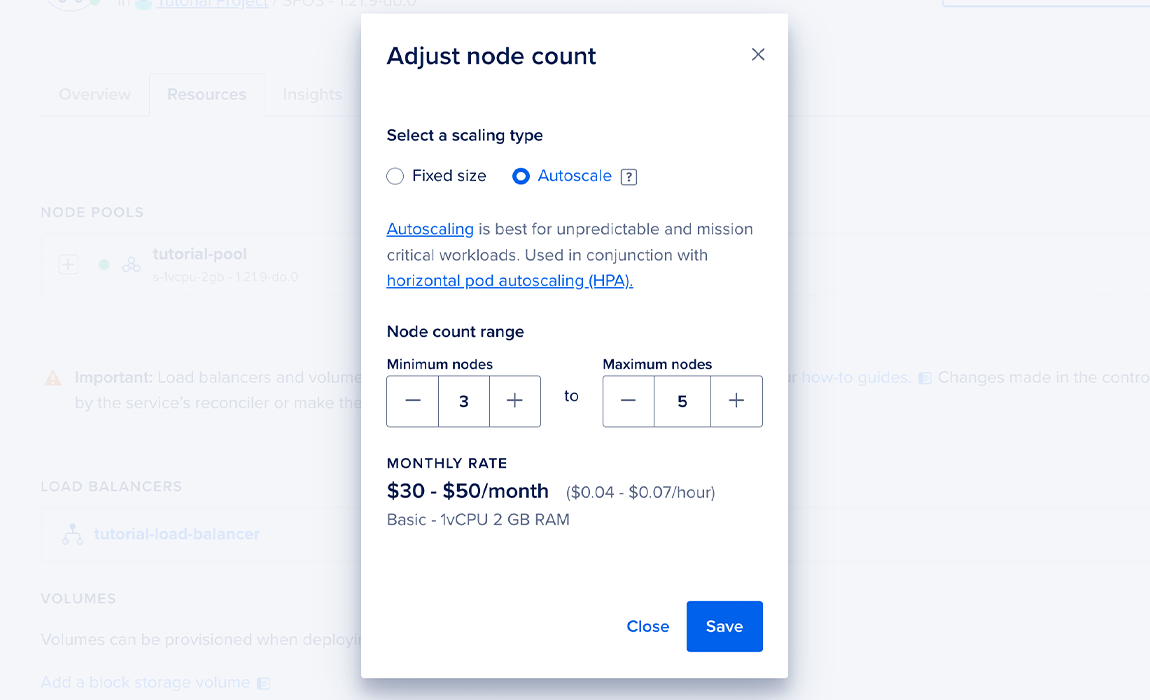

Select Resize or Autoscale, and a modal window opens prompting for configuration details. After selecting Autoscale, you can set the following options for the node pool:

- Minimum Nodes: Determines the smallest size the cluster is allowed to “scale down” to; must be no less than 1 and no greater than Maximum Nodes.
- Maximum Nodes: Determines the largest size the cluster is allowed to “scale up” to. The upper limit is constrained by the Droplet limit on your account, which is 25 by default, and the number of Droplets already running, which subtracts from that limit. You can request to have your Droplet limit increased.

Using doctl

You can use doctl to enable cluster autoscaling on any node pool. You’ll need to provide three specific configuration values:

- auto-scale: Specifies that autoscaling should be enabled.
- min-nodes: Determines the smallest size the cluster is allowed to “scale down” to; must be no less than 1 and no greater than max-nodes.
- max-nodes: Determines the largest size the cluster is allowed to “scale up” to. The upper limit is constrained by the Droplet limit on your account, which is 25 by default, and the number of Droplets already running, which subtracts from that limit. You can request to have your Droplet limit increased.
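The limits on min-nodes and max-nodes described above can be checked locally before running doctl. The following is a minimal sketch, not part of doctl: `validate_pool_size` is a hypothetical helper, and it assumes the running Droplet count you pass in does not already include nodes from the pool being configured.

```shell
# Hypothetical helper (not a doctl feature): check min/max node values
# against the rules described above.
# Usage: validate_pool_size MIN MAX [DROPLET_LIMIT] [RUNNING_DROPLETS]
validate_pool_size() {
  min=$1
  max=$2
  limit=${3:-25}    # default account Droplet limit stated in the text
  running=${4:-0}   # assumption: Droplets running outside this pool
  headroom=$(( limit - running ))

  if [ "$min" -lt 1 ]; then
    echo "min-nodes must be at least 1"
    return 1
  fi
  if [ "$min" -gt "$max" ]; then
    echo "min-nodes must not exceed max-nodes"
    return 1
  fi
  if [ "$max" -gt "$headroom" ]; then
    echo "max-nodes exceeds remaining Droplet headroom ($headroom)"
    return 1
  fi
  echo "ok"
}

validate_pool_size 1 10   # prints "ok"
```

For example, with the default limit of 25 and 10 Droplets already running, `validate_pool_size 1 20 25 10` reports that only 15 Droplets of headroom remain.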

You can apply autoscaling to a node pool at cluster creation time if you use a semicolon-delimited string.

doctl kubernetes cluster create mycluster --node-pool "name=mypool;auto-scale=true;min-nodes=1;max-nodes=10"
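When the pool settings come from variables, the semicolon-delimited value can be assembled first and then passed to the command above. This is a small sketch; `node_pool_spec` is a hypothetical helper name, and only the keys shown in the command above are used.

```shell
# Hypothetical helper: build the semicolon-delimited --node-pool value
# from a pool name, a minimum node count, and a maximum node count.
node_pool_spec() {
  printf 'name=%s;auto-scale=true;min-nodes=%s;max-nodes=%s\n' "$1" "$2" "$3"
}

spec=$(node_pool_spec mypool 1 10)
echo "$spec"

# Keep the value quoted, otherwise the shell treats each ';' as a
# command separator:
# doctl kubernetes cluster create mycluster --node-pool "$spec"
```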

You can also configure new node pools to have autoscaling enabled at creation time.

doctl kubernetes cluster node-pool create mycluster mypool --auto-scale --min-nodes 1 --max-nodes 10

If your cluster is already running, you can enable autoscaling on any existing node pool.

doctl kubernetes cluster node-pool update mycluster mypool --auto-scale --min-nodes 1 --max-nodes 10

Disabling Autoscaling

Using the DigitalOcean Control Panel

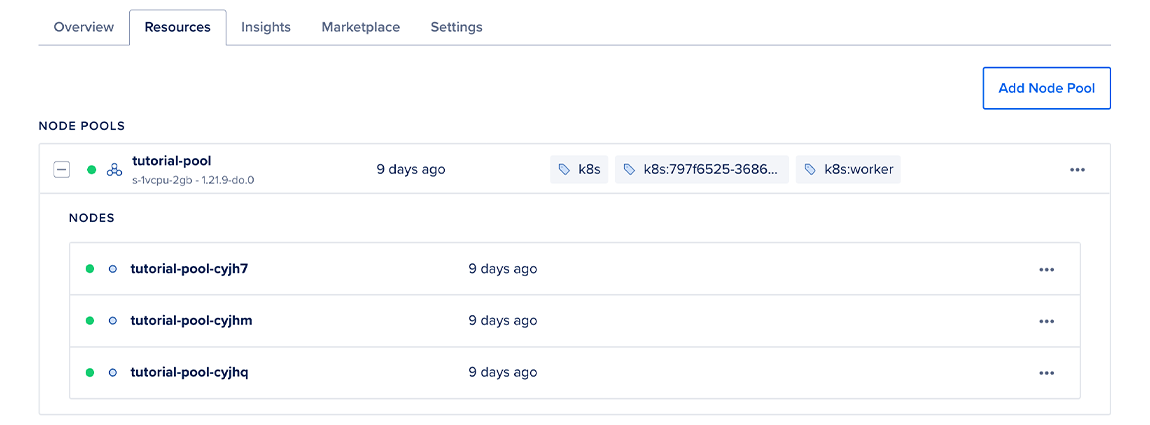

To disable autoscaling on an existing node pool, navigate to your cluster in the Kubernetes section of the control panel, then click on the Resources tab. Click on the three dots to reveal the option to resize the node pool manually or enable autoscaling.

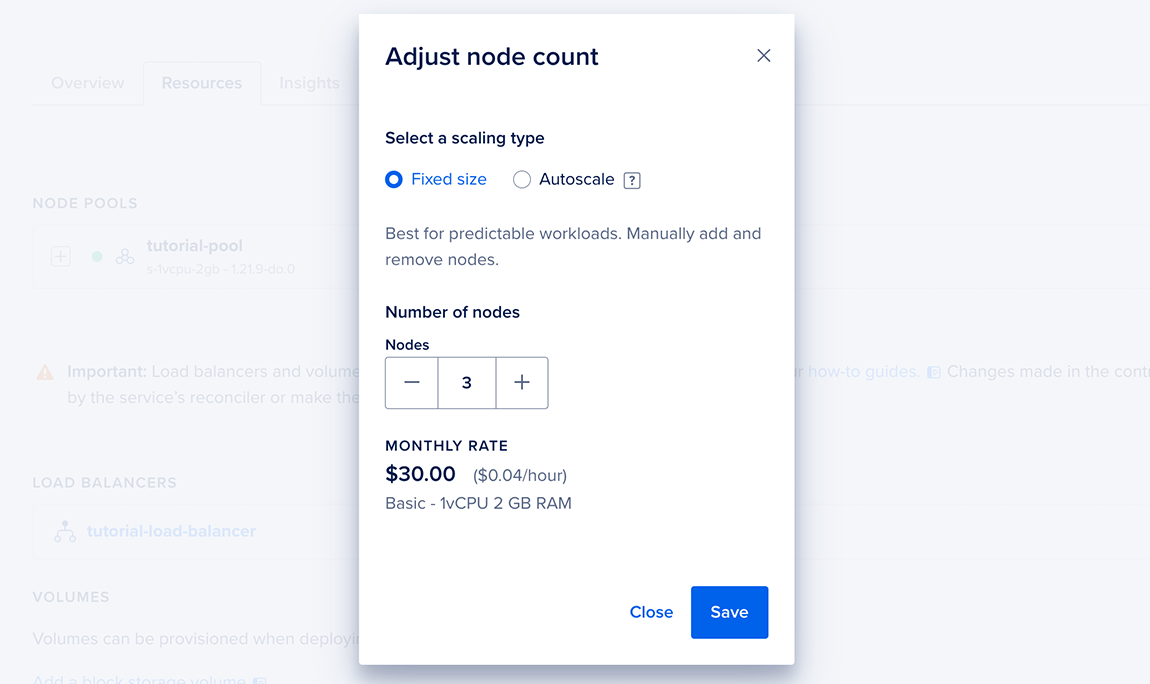

Select Resize or Autoscale, and a modal window opens prompting for configuration details. Select Fixed size and configure the number of nodes you want to assign to the pool.

Using doctl

To disable autoscaling, run an update command that specifies the node pool and cluster:

doctl kubernetes cluster node-pool update mycluster mypool --auto-scale=false

Viewing Cluster Autoscaler Status

You can check the status of the Cluster Autoscaler to view recent events or for debugging purposes.

Check the kube-system/cluster-autoscaler-status config map by running the following command:

kubectl get configmap cluster-autoscaler-status -o yaml -n kube-system

The command returns results such as this:

apiVersion: v1
data:
  status: |+
    Cluster-autoscaler status at 2021-01-27 21:57:30.462764772 +0000 UTC:
    Cluster-wide:
      Health: Healthy (ready=5 unready=0 notStarted=0 longNotStarted=0 registered=5 longUnregistered=0)
        LastProbeTime: 2021-01-27 21:57:30.27867919 +0000 UTC m=+499650.735961122
        LastTransitionTime: 2021-01-22 03:11:00.371995979 +0000 UTC m=+60.829277965
      ScaleUp: NoActivity (ready=5 registered=5)
        LastProbeTime: 2021-01-27 21:57:30.27867919 +0000 UTC m=+499650.735961122
        LastTransitionTime: 2021-01-22 19:09:20.360421664 +0000 UTC m=+57560.817703589
      ScaleDown: NoCandidates (candidates=0)
        LastProbeTime: 2021-01-27 21:57:30.27867919 +0000 UTC m=+499650.735961122
        LastTransitionTime: 2021-01-22 19:09:20.360421664 +0000 UTC m=+57560.817703589
...

To learn more about what is published in the config map, see What events are emitted by CA?.
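For a quick look at the overall state, the status text can be filtered down to its Health, ScaleUp, and ScaleDown lines. The sketch below assumes the plain-text status format shown in the sample output; other Cluster Autoscaler versions may publish a different format. `summarize_ca_status` is a hypothetical helper name.

```shell
# Hypothetical helper: read the status text on standard input and keep
# only the Health, ScaleUp, and ScaleDown lines, with whitespace tidied.
summarize_ca_status() {
  grep -E '^[[:space:]]*(Health|ScaleUp|ScaleDown):' |
    sed -E 's/^[[:space:]]+//; s/[[:space:]]+/ /g'
}

# With cluster access, fetch only the status text and filter it:
# kubectl get configmap cluster-autoscaler-status -n kube-system \
#   -o jsonpath='{.data.status}' | summarize_ca_status
```

If the status also contains per-node-group sections, their Health, ScaleUp, and ScaleDown lines are printed as well, in the order they appear.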

In the case of an error, you can troubleshoot by using kubectl get events -A or kubectl describe <resource-type> <resource-name> to check for any events on the Kubernetes resources.

Autoscaling in Response to Heavy Resource Use

Pod creation and destruction can be automated by a Horizontal Pod Autoscaler (HPA), which monitors the resource use of a workload’s pods and adjusts the number of replicas when certain conditions occur, such as sustained CPU spikes or memory use surpassing a certain threshold. Combined with a CA, this gives you powerful tools to configure your cluster’s responsiveness to resource demands: an HPA keeps the number of pods in step with resource use, and a CA keeps the cluster’s size in step with the number of pods.
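To make the HPA side of this concrete, the Kubernetes documentation gives the basic scaling rule as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below works through that arithmetic only; `hpa_desired_replicas` is a hypothetical helper, and it ignores the tolerance band and the minimum and maximum replica limits that a real HPA also applies.

```shell
# Hypothetical helper: the basic HPA rule using integer arithmetic.
# Usage: hpa_desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC
# Computes ceil(current_replicas * current_metric / target_metric).
hpa_desired_replicas() {
  echo $(( ($1 * $2 + $3 - 1) / $3 ))
}

# 4 replicas averaging 90% CPU against a 50% target:
hpa_desired_replicas 4 90 50   # prints 8
```

If the existing nodes cannot fit the additional pods, those pods stay pending, and that unschedulable state is what prompts the CA to add nodes.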

For a walk-through that builds an autoscaling cluster and demonstrates the interplay between an HPA and a CA, see Example of Kubernetes Cluster Autoscaling Working With Horizontal Pod Autoscaling.

PodDisruptionBudget Support

A PodDisruptionBudget (PDB) specifies the minimum number of replicas that an application must keep running during a voluntary disruption, or equivalently the maximum number that may be unavailable, relative to how many it is intended to have. For example, if you set the replicas value for a deployment to 5 and set the PDB’s maxUnavailable to 1, potentially disruptive actions like cluster upgrades and resizes occur with no fewer than four pods running.

When scaling down a cluster, the DOKS autoscaler respects this setting, and follows the documented Kubernetes procedure for draining and deleting nodes when a PDB has been specified.

We recommend you set a PDB for your workloads to ensure graceful scaledown. For more information, see Specifying a Disruption Budget for your Application in the Kubernetes documentation.
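As a starting point, a PDB for the five-replica example might look like the sketch below. The file name, the PDB name `myapp-pdb`, and the `app: myapp` label are placeholders chosen for illustration; the selector has to match the labels on your own workload’s pods.

```shell
# Write a hypothetical PDB manifest to pdb.yaml.
cat > pdb.yaml <<'EOF'
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: myapp-pdb
spec:
  maxUnavailable: 1        # with 5 replicas, at least 4 stay running
  selector:
    matchLabels:
      app: myapp           # placeholder; must match your pods' labels
EOF

# With cluster access, apply it and confirm it was created:
# kubectl apply -f pdb.yaml
# kubectl get poddisruptionbudget myapp-pdb
```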